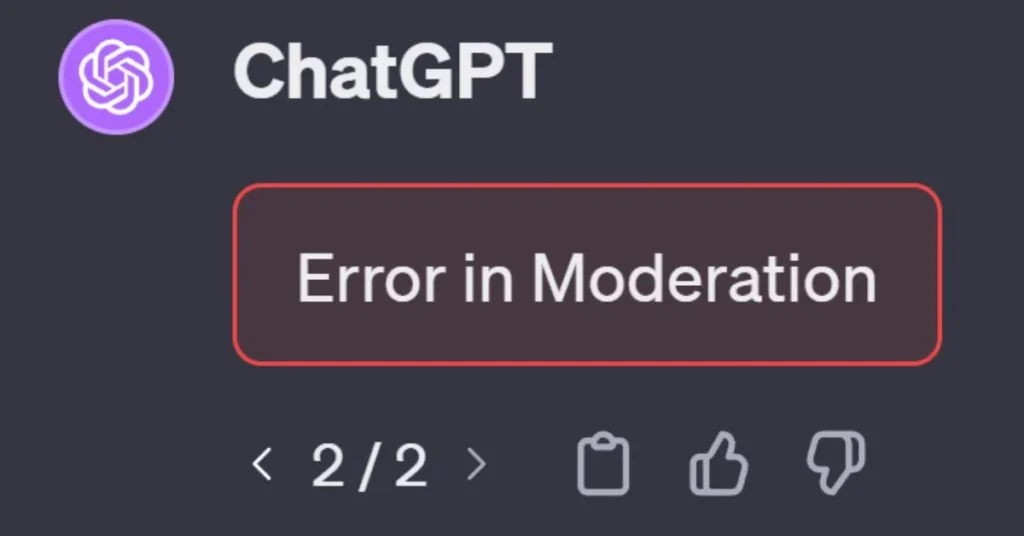

Chatbots have changed the way we interact with technology. From customer service to personal assistants, these AI-driven tools make everyday tasks faster and easier. One prominent example is ChatGPT, OpenAI’s chatbot built on its GPT family of language models, which generates human-like text responses. Impressive as it is, users sometimes run into problems, including one known as an "error in moderation." In this guide, we explain what an error in moderation in ChatGPT is and how to deal with it.

Knowing what causes these errors and how to fix them can make your experience with Moderation ChatGPT much smoother. If you’ve ever been puzzled by an unexpected response or wondered why certain content was flagged, this article is for you. Let’s look at how Moderation ChatGPT works, where it commonly goes wrong, and what you can do about it.

Understanding Moderation ChatGPT

Moderation ChatGPT refers to the safety checks that screen conversations in ChatGPT to keep generated content in line with OpenAI’s usage policies and broader ethical standards. It plays a crucial role in filtering out harmful or inappropriate requests and responses.

Alongside the model’s own safety training, this screening relies on separate machine-learning classifiers trained on large labeled datasets. They score text against categories such as harassment, hate, self-harm, and violence, and flag language that could be offensive, harmful, or otherwise unacceptable.
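For developers, OpenAI exposes a moderation classifier through its Moderation API. Its documented response contains a `results` list, and each entry carries a boolean `flagged`, per-category booleans in `categories`, and per-category scores in `category_scores`. The minimal sketch below assumes a response already parsed into a Python dictionary of that shape and lists the categories that were flagged. The helper name `flagged_categories` is hypothetical, and the sample data is invented rather than real API output. The sketch illustrates how a category-based classifier reports its decisions, not ChatGPT’s internal pipeline.

```python
from typing import Any


def flagged_categories(response: dict[str, Any]) -> list[str]:
    """Return the categories marked True in the first moderation result.

    Assumes the JSON shape documented for OpenAI's Moderation API:
    {"results": [{"flagged": bool, "categories": {...}, "category_scores": {...}}]}
    """
    result = response["results"][0]
    if not result["flagged"]:
        return []
    # Keep only the categories the classifier marked as violations.
    return sorted(name for name, hit in result["categories"].items() if hit)


# Invented sample data in the documented shape (not real API output).
sample = {
    "results": [
        {
            "flagged": True,
            "categories": {"harassment": True, "violence": False},
            "category_scores": {"harassment": 0.91, "violence": 0.02},
        }
    ]
}

print(flagged_categories(sample))  # ['harassment']
```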

Understanding how Moderation ChatGPT operates helps users interact with the model more effectively. Its goal is not only accurate answers but also safe communication.

By recognizing its capabilities and limitations, users can better navigate conversations while remaining mindful of community norms. This knowledge fosters a more responsible use of AI technology in various applications like customer support or social media engagement.

Common Errors in Moderation ChatGPT

Moderation ChatGPT is prone to several common errors. One frequent issue is misreading context: when the input gives few clear signals about intent, the system may respond inappropriately or block a harmless request.

Another problem is over-moderation, where ChatGPT mistakenly flags or blocks harmless content, frustrating users who are engaging in good faith.
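If you run moderation in your own application, one common way to reduce these false positives (harmless content flagged by mistake) is to apply your own per-category thresholds to the classifier’s scores instead of relying on a single yes/no flag. The sketch below assumes scores between 0 and 1, like the `category_scores` field of OpenAI’s Moderation API. The `THRESHOLDS` values and the `DEFAULT_THRESHOLD` fallback are hypothetical and would need tuning on your own data.

```python
# Hypothetical per-category thresholds; higher values flag less often.
THRESHOLDS = {"harassment": 0.8, "violence": 0.9}
DEFAULT_THRESHOLD = 0.5  # assumed fallback for categories not listed above


def over_threshold(scores: dict[str, float]) -> list[str]:
    """Return the categories whose score meets or exceeds their threshold."""
    return sorted(
        name
        for name, score in scores.items()
        if score >= THRESHOLDS.get(name, DEFAULT_THRESHOLD)
    )


# A sarcastic but harmless message might score moderately on harassment.
scores = {"harassment": 0.62, "violence": 0.05, "self-harm": 0.01}
print(over_threshold(scores))  # []
```

With a uniform cutoff of 0.5, the same message would be flagged for harassment. Raising the bar for that category trades some missed violations for fewer false alarms.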

The system can also fail to recognize sarcasm or humor and treat a lighthearted remark as a serious one.

Technical glitches can also disrupt the moderation check itself. These show up as error messages, sluggish performance, or a chat that stops responding, and they are often temporary, for example during periods of heavy server load.

Finally, dialects and slang can trip up the system’s language comprehension. This leads to missed or mistaken flags and a worse experience for users who write that way.

How to Fix an Error in Moderation ChatGPT

When you encounter an error in Moderation ChatGPT, first identify what kind of issue you’re facing. Was your content blocked by the filter, or did the moderation check fail for a technical reason? Knowing the source tells you which fix to try.
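If you call a moderation service from your own code, the HTTP status code is often the quickest way to separate these cases. The sketch below relies only on standard HTTP semantics: 429 for rate limiting, 5xx for server-side faults, and other 4xx codes for problems with the request itself. The function name and category labels are hypothetical, and a specific provider may document additional codes.

```python
def classify_failure(status: int) -> str:
    """Map an HTTP status code to a rough troubleshooting category."""
    if 200 <= status < 300:
        return "ok"  # request succeeded; any block came from the content check itself
    if status == 429:
        return "rate_limited"  # too many requests: wait, then retry
    if 500 <= status < 600:
        return "server_error"  # transient service fault: retry later
    if status in (401, 403):
        return "auth_error"  # check the API key or account permissions
    if 400 <= status < 500:
        return "bad_request"  # fix the input before retrying
    return "unknown"


for code in (200, 400, 401, 429, 503):
    print(code, classify_failure(code))
```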

If it looks like a technical glitch, clear your browser cache and refresh the page or start a new session. This often resolves minor, temporary errors.
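In an application that calls a moderation endpoint, the programmatic equivalent of "refresh and try again" is an automatic retry with exponential backoff for transient failures. The sketch below wraps any callable. `TransientError` is a hypothetical exception standing in for whatever your HTTP client raises on a 429 or 5xx response, and the default attempt count and delays are assumptions.

```python
import random
import time
from typing import Callable, TypeVar

T = TypeVar("T")


class TransientError(Exception):
    """Hypothetical stand-in for a rate-limit (429) or server (5xx) error."""


def with_backoff(
    call: Callable[[], T],
    max_attempts: int = 5,
    base_delay: float = 0.5,
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Run `call`, retrying on TransientError with exponentially growing waits."""
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    for attempt in range(1, max_attempts + 1):
        try:
            return call()
        except TransientError:
            if attempt == max_attempts:
                raise  # out of attempts: let the caller see the error
            # Wait base_delay, 2*base_delay, 4*base_delay, ... plus random jitter
            # so that many clients do not retry in lockstep.
            sleep(base_delay * 2 ** (attempt - 1) + random.uniform(0, base_delay))
    raise AssertionError("unreachable")


# Usage: a simulated service that fails twice, then succeeds.
attempts = {"n": 0}


def flaky_check() -> str:
    attempts["n"] += 1
    if attempts["n"] < 3:
        raise TransientError("service busy")
    return "ok"


# sleep is replaced with a no-op so the demo does not actually wait.
print(with_backoff(flaky_check, sleep=lambda _: None))  # ok
```

Injecting `sleep` as a parameter keeps the retry logic testable without real delays.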

If the issue persists, or if your content was filtered, review your prompt. Make sure it complies with OpenAI’s usage policies, and try rephrasing it with clearer context. A more precise prompt often lets ChatGPT handle the request as intended.

For persistent problems, check OpenAI’s status page and help documentation for known outages and troubleshooting tips. Community forums are also worth searching, since other users often share fixes that worked for similar problems.

If nothing else works, contact OpenAI’s support team. They can look into specific errors that you cannot resolve on your own.

Tips for Using Moderation ChatGPT Effectively

To get the most out of Moderation ChatGPT, start with clear guidelines that define what is acceptable in your chat environment and what crosses the line.
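Writing those guidelines down as data makes them easier to review and change. The sketch below maps moderation categories to actions and applies the strictest action among the categories that were flagged. The category names mirror some of those used by OpenAI’s Moderation API. The actions, their ordering, and the `POLICY` table itself are hypothetical choices for an example community.

```python
# Actions ordered from least to most restrictive.
SEVERITY = {"allow": 0, "review": 1, "block": 2}

# Hypothetical policy for an example community; adjust to your own rules.
POLICY = {
    "harassment": "block",
    "hate": "block",
    "violence": "review",   # e.g. a history forum may discuss wars legitimately
    "self-harm": "review",  # route to a person who can respond with care
}


def decide(flagged: list[str], default: str = "review") -> str:
    """Return the strictest action among the flagged categories."""
    if not flagged:
        return "allow"
    # Unknown categories fall back to `default` rather than being ignored.
    actions = [POLICY.get(category, default) for category in flagged]
    return max(actions, key=SEVERITY.__getitem__)


print(decide([]))                          # allow
print(decide(["violence"]))                # review
print(decide(["violence", "harassment"]))  # block
```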

Engage users by setting a friendly tone in interactions. A welcoming approach encourages more honest communication and reduces misunderstandings.

Update your moderation filters regularly based on user feedback so they keep pace with evolving slang and new kinds of problematic content.
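One way to turn user feedback into a concrete filter update is to collect messages where you know both the automated score and a human verdict, for example from upheld or overturned appeals. From that data you can pick the lowest score threshold that still meets a target precision (the share of flags that were correct). The sketch below does this on a tiny made-up dataset. The 0.8 precision target, the data, and the `pick_threshold` name are assumptions.

```python
from typing import Optional


def pick_threshold(
    feedback: list[tuple[float, bool]], target_precision: float = 0.8
) -> Optional[float]:
    """Return the lowest score threshold whose precision meets the target.

    `feedback` holds (score, truly_harmful) pairs confirmed by human reviewers.
    Returns None if no candidate threshold reaches the target precision.
    """
    # Try each observed score as a candidate threshold, lowest first, so the
    # first match flags as much content as possible while meeting the target.
    for threshold in sorted({score for score, _ in feedback}):
        verdicts = [harmful for score, harmful in feedback if score >= threshold]
        precision = sum(verdicts) / len(verdicts)  # share of flags that were correct
        if precision >= target_precision:
            return threshold
    return None


# Made-up feedback: automated score plus the human verdict on each message.
feedback = [
    (0.95, True), (0.90, True), (0.85, True),
    (0.70, False), (0.65, True), (0.60, False), (0.40, False),
]
print(pick_threshold(feedback))  # 0.65
```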

Incorporate human oversight for delicate situations. While AI can handle many tasks, a human touch remains invaluable when addressing sensitive topics.
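A common pattern for adding that human touch is a "gray zone." Scores clearly below one cutoff are allowed, scores clearly above another are blocked automatically, and everything in between goes to a person. The cutoffs below (0.4 and 0.9) and the in-memory `review_queue` are placeholder assumptions, not recommended values.

```python
from collections import deque

LOW, HIGH = 0.4, 0.9  # placeholder cutoffs; tune them on your own labeled data

# Stand-in for a real moderator queue or ticketing system.
review_queue: deque[tuple[str, float]] = deque()


def route(message: str, score: float) -> str:
    """Allow, block, or queue a message for human review based on its score."""
    if score < LOW:
        return "allow"
    if score >= HIGH:
        return "block"
    review_queue.append((message, score))  # gray zone: let a person decide
    return "human_review"


print(route("see you at practice", 0.05))   # allow
print(route("that joke was killer", 0.55))  # human_review
print(len(review_queue))                    # 1
```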

Use analytics to track moderation performance over time. The patterns you find will show where your strategy needs refining.
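Two simple metrics to start with are the share of messages flagged and the share of flags that human reviewers later overturn. A rising overturn rate is one signal of over-moderation. The sketch below computes both metrics per day from a list of log records. The record fields (`day`, `flagged`, `overturned`) are a hypothetical schema, and the sample records are made up.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class LogRecord:
    day: str          # hypothetical log schema, e.g. "2024-05-01"
    flagged: bool     # did the automated filter flag the message?
    overturned: bool  # did a human reviewer reverse that flag?


def daily_metrics(records: list[LogRecord]) -> dict[str, tuple[float, float]]:
    """Return {day: (flag_rate, overturn_rate)}, where overturn rate is per flag."""
    # Per day: [messages seen, messages flagged, flags overturned]
    totals: dict[str, list[int]] = defaultdict(lambda: [0, 0, 0])
    for r in records:
        counts = totals[r.day]
        counts[0] += 1
        counts[1] += int(r.flagged)
        counts[2] += int(r.flagged and r.overturned)
    return {
        day: (flagged / seen, overturned / flagged if flagged else 0.0)
        for day, (seen, flagged, overturned) in sorted(totals.items())
    }


logs = [
    LogRecord("2024-05-01", flagged=True, overturned=False),
    LogRecord("2024-05-01", flagged=False, overturned=False),
    LogRecord("2024-05-01", flagged=True, overturned=True),
    LogRecord("2024-05-01", flagged=False, overturned=False),
    LogRecord("2024-05-02", flagged=True, overturned=True),
    LogRecord("2024-05-02", flagged=False, overturned=False),
]
print(daily_metrics(logs))
# {'2024-05-01': (0.5, 0.5), '2024-05-02': (0.5, 1.0)}
```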

Encourage community involvement by letting users report issues or suggest improvements. This builds trust and improves the experience for everyone in the moderated space.
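A lightweight way to act on user reports is to count distinct reporters per message and escalate to a moderator once a threshold is reached. The threshold of 3 and the `ReportTracker` class are hypothetical. Counting distinct users rather than raw reports keeps a single person from escalating a message alone.

```python
from collections import defaultdict


class ReportTracker:
    """Count distinct reporters per message and signal when to escalate."""

    def __init__(self, escalate_at: int = 3) -> None:
        self.escalate_at = escalate_at  # hypothetical threshold
        self.reporters: dict[str, set[str]] = defaultdict(set)

    def report(self, message_id: str, user_id: str) -> bool:
        """Record a report; return True only when the message first needs review."""
        before = len(self.reporters[message_id])
        self.reporters[message_id].add(user_id)  # repeat reports are ignored
        after = len(self.reporters[message_id])
        return before < self.escalate_at <= after


tracker = ReportTracker()
print(tracker.report("msg-1", "alice"))  # False
print(tracker.report("msg-1", "alice"))  # False (same user again)
print(tracker.report("msg-1", "bob"))    # False
print(tracker.report("msg-1", "carol"))  # True
```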

Alternatives to Moderation ChatGPT

Several alternatives to Moderation ChatGPT stand out, each with features suited to different needs.

One popular choice is Perspective API, developed by Jigsaw and Google. It analyzes text and returns scores estimating how likely a comment is to be perceived as toxic. Platforms can use these scores to keep dialogue respectful without shutting down legitimate discussion.
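The sketch below shows roughly what a Perspective request and response look like. The endpoint URL and the JSON field names (`comment.text`, `requestedAttributes`, `attributeScores.TOXICITY.summaryScore.value`) follow the shape described in Perspective’s public documentation, but verify them against the current docs before relying on them. `YOUR_API_KEY` is a placeholder, the helper names are hypothetical, and the sample response is invented. The request is built but never sent.

```python
import json
import urllib.request

ENDPOINT = "https://commentanalyzer.googleapis.com/v1alpha1/comments:analyze"


def build_request(text: str, api_key: str) -> urllib.request.Request:
    """Build (but do not send) a Perspective API request for a TOXICITY score."""
    body = {
        "comment": {"text": text},
        "requestedAttributes": {"TOXICITY": {}},
    }
    return urllib.request.Request(
        f"{ENDPOINT}?key={api_key}",
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def toxicity(response: dict) -> float:
    """Extract the overall TOXICITY probability from a parsed response."""
    return response["attributeScores"]["TOXICITY"]["summaryScore"]["value"]


req = build_request("Thanks for the helpful answer!", api_key="YOUR_API_KEY")
# Sending it would look like: json.load(urllib.request.urlopen(req))

# Invented response in the documented shape (not real API output).
sample = {
    "attributeScores": {
        "TOXICITY": {"summaryScore": {"value": 0.02, "type": "PROBABILITY"}}
    }
}
print(toxicity(sample))  # 0.02
```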

Another option is Microsoft’s Azure Content Moderator, which moderates images as well as text and combines machine learning with human review. Microsoft has since deprecated Content Moderator in favor of Azure AI Content Safety, so new projects should evaluate that service instead.

For community-driven moderation, Discourse offers built-in tools for forums and discussion boards, such as user flags and a review queue. These can be configured to match a community’s own guidelines.

Finally, consider specialized chatbots built for a particular topic or industry. These often come with moderation settings tailored to their environment, keeping conversations relevant while filtering out harmful content.

Conclusion

Chatbots and AI-driven technologies like GPT have transformed how we interact with digital platforms. They provide instant responses, enhance user experience, and streamline communication. However, errors in ChatGPT’s moderation process can undermine that effectiveness.

Understanding what an error in Moderation ChatGPT is matters for both users and developers. Moderation systems help filter inappropriate content, but they are not infallible. Recognizing common errors, such as misinterpreting context or failing to flag harmful content, is the first step toward better results.

On the developer side, fixing these errors can involve retraining models on more diverse datasets, tuning thresholds and other parameters for better contextual understanding, and building more robust filtering pipelines. Users, in turn, should read outputs critically to keep interactions high quality.

Using Moderation ChatGPT effectively takes a proactive approach: write clear prompts, provide enough context, and use feedback mechanisms to improve results over time.

If Moderation ChatGPT does not fit your needs, other options range from rule-based systems to human moderators.

Managing errors in moderation tools can be challenging. Understanding how these systems work lets you get the most out of them while avoiding the pitfalls of automated content moderation.

FAQs

What is an error in Moderation ChatGPT?

An error in Moderation ChatGPT occurs when the system misinterprets context, over-moderates benign content, or encounters technical glitches, leading to incorrect or unexpected responses.

How does Moderation ChatGPT filter inappropriate content?

Moderation ChatGPT uses machine-learning classifiers trained on large datasets to identify and flag harmful or inappropriate language. This helps keep conversations within usage policies and community guidelines.

Why might Moderation ChatGPT over-moderate benign content?

Over-moderation happens when the classifiers are too sensitive (for example, when their flagging threshold is set too low) or lack contextual understanding, so harmless content gets flagged by mistake.

How can users improve their interactions with Moderation ChatGPT?

Provide clear, context-rich prompts and use the available feedback tools to report mistakes. If you run your own moderated platform, also update your moderation filters regularly.

What are some alternatives to Moderation ChatGPT for content moderation?

Alternatives include Google’s Perspective API, Microsoft’s Azure Content Moderator, Discourse’s built-in moderation tools, and specialized chatbots with custom moderation settings for niche topics or industries.